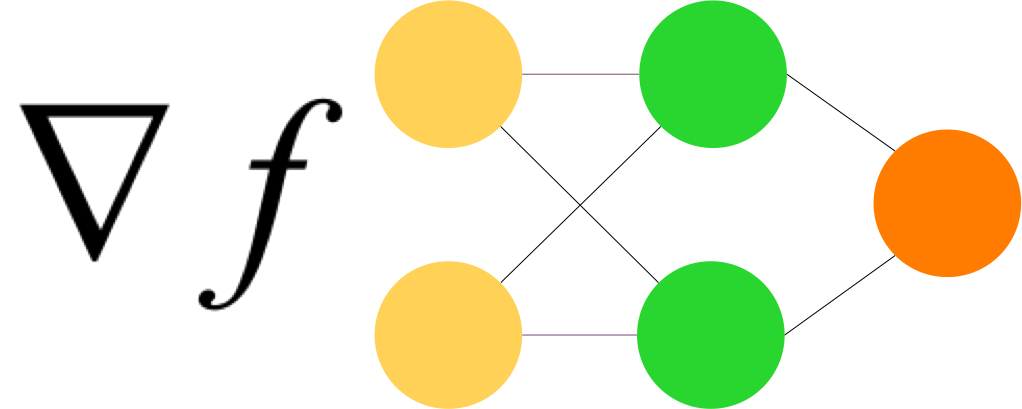

According to TensorFlow:

To recap: here are the most common ways to prevent overfitting in neural networks:

- Get more training data.

- Reduce the capacity of the network.

- Add weight regularization.

- Add dropout.

Two important approaches not covered in this guide are:

- data-augmentation

- batch normalization

Remember that each method can help on its own, but often combining them can be even more effective.
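The quoted guide names data augmentation and batch normalization without covering them. The sketch below illustrates both in plain NumPy rather than with TensorFlow's own layers. The function names (`augment`, `batch_norm_train`), the flip-plus-noise augmentation and the `(batch, height, width)` image shape are all illustrative assumptions. The batch-norm function shows training mode only. At inference, batch normalization normally uses running averages of the mean and variance instead of batch statistics.

```python
import numpy as np

def augment(images, rng, noise_std=0.05):
    # Hypothetical augmentation for images of shape (batch, height, width):
    # flip roughly half of the images horizontally, then add small Gaussian noise.
    out = images.copy()
    flip = rng.random(len(out)) < 0.5
    out[flip] = out[flip][:, :, ::-1]
    return out + rng.normal(0.0, noise_std, size=out.shape)

def batch_norm_train(x, gamma, beta, eps=1e-5):
    # Training-mode batch normalization for x of shape (batch, features):
    # normalize each feature with the batch mean and variance, then scale and shift.
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta

rng = np.random.default_rng(0)
images = rng.random((8, 28, 28))  # hypothetical batch of grayscale images
augmented = augment(images, rng)
features = rng.normal(3.0, 2.0, size=(64, 4))
normed = batch_norm_train(features, gamma=np.ones(4), beta=np.zeros(4))
print(normed.mean(axis=0).round(6), normed.std(axis=0).round(3))
```

Each augmented batch is a slightly different view of the same data. This acts like extra training data, the first remedy in the list. The techniques combine naturally: augmented inputs can feed a network that also uses batch normalization, dropout and L2 regularization.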

TensorFlow has an interesting notebook on GCP that demonstrates the benefit of weight decay (L2 regularization) and dropout on the Higgs dataset.
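The notebook itself uses Keras, as do Keras's `tf.keras.regularizers.l2` and `tf.keras.layers.Dropout`. The sketch below instead shows the underlying mechanics in NumPy. The function names and the tiny weight list are hypothetical, chosen for illustration.

- **L2 regularization.** Adding `l2 * ||w||^2` to the loss means that, with plain SGD, each step first shrinks the weights by a factor `(1 - 2 * lr * l2)`, hence the name "weight decay". This equivalence holds for plain SGD but not for adaptive optimizers such as Adam, which is why decoupled weight decay (AdamW) was introduced.
- **Inverted dropout.** During training, each unit is zeroed with probability `rate` and the surviving units are rescaled. At inference the layer does nothing.

```python
import numpy as np

def l2_penalty(weights, l2):
    # Sum of squared weights over all layers, scaled by the coefficient `l2`;
    # this term is added to the data loss during training.
    return l2 * sum(np.sum(w ** 2) for w in weights)

def sgd_step(w, grad_loss, lr, l2):
    # The gradient of loss + l2 * ||w||^2 is grad_loss + 2 * l2 * w, so the
    # update shrinks ("decays") w by (1 - 2 * lr * l2) before the usual step.
    return (1.0 - 2.0 * lr * l2) * w - lr * grad_loss

def dropout(x, rate, rng, training=True):
    # Inverted dropout: zero each unit with probability `rate` during training and
    # rescale survivors by 1 / (1 - rate) so the expected activation is unchanged.
    # At inference time the layer is the identity.
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

rng = np.random.default_rng(0)
weights = [np.ones((2, 3)), np.full(3, 2.0)]  # hypothetical tiny two-layer model
print(l2_penalty(weights, 0.001))  # 0.001 * (6 * 1**2 + 3 * 2**2)
activations = np.ones((4, 5))
print(dropout(activations, 0.5, rng))  # every entry is either 0.0 or 2.0
```

Because the rescaling happens at training time, inference needs no correction factor. This is the convention Keras's `Dropout` layer follows.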