The reference tutorial for this first part on NLP is the online course Natural Language Processing in TensorFlow by Laurence Moroney (Google Brain), presented in week 2 of the training program.

The same code is used in various tutorials.

The dataset for this tutorial is imdb_reviews.

We provide a set of 25,000 highly polar movie reviews for training, and 25,000 for testing

These data, split into two sets of 25,000 records (training/test), are movie reviews with binary labels (positive or negative), collected before 2011.

The goal of the exercise is to classify each review as positive or negative based on its text.

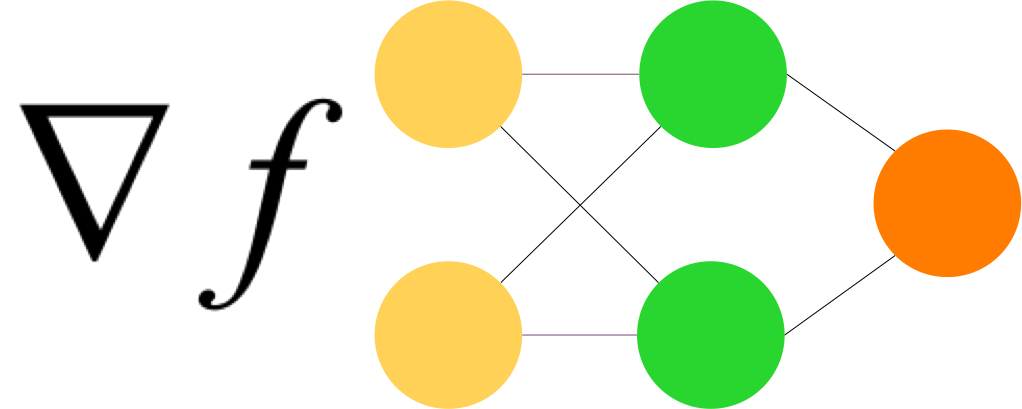

The principle is simple. First, we build a lexicon from the dataset, then we tokenise: in every record, each word is replaced by the number associated with it in the lexicon. Next, we pad the sequences so that they all have the same length. We then choose the embedding dimension and build the network. The rest resembles what was covered previously.
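To make the lexicon and tokenisation steps concrete, here is a minimal pure-Python sketch on a two-sentence toy corpus. The helper names (`build_lexicon`, `tokenize`) and the toy sentences are illustrative assumptions; the notebook itself relies on the Keras `Tokenizer`, which also handles punctuation and other details that this sketch ignores.

```python
from collections import Counter

def build_lexicon(texts, oov_token="<OOV>"):
    """Map each word to an integer: 1 is reserved for the OOV token and
    more frequent words receive smaller indices (0 is left free for padding)."""
    counts = Counter(word for text in texts for word in text.lower().split())
    lexicon = {oov_token: 1}
    for index, (word, _) in enumerate(counts.most_common(), start=2):
        lexicon[word] = index
    return lexicon

def tokenize(text, lexicon, oov_token="<OOV>"):
    """Replace each word by its number in the lexicon, or by the OOV index."""
    return [lexicon.get(word, lexicon[oov_token]) for word in text.lower().split()]

# Hypothetical toy corpus, not taken from imdb_reviews
toy_corpus = ["a great movie", "a dull movie with a dull plot"]
lexicon = build_lexicon(toy_corpus)

known = tokenize("a dull movie", lexicon)          # every word is in the lexicon
unseen = tokenize("a great soundtrack", lexicon)   # "soundtrack" maps to OOV (1)
print(lexicon["a"], known, unseen)
```

The most frequent word ("a") gets index 2, directly after the OOV token, which mirrors the ordering visible in the `word_index` of the notebook.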

import tensorflow as tf

print(tf.__version__)

2.2.0-rc3

import tensorflow_datasets as tfds

Reading the data

imdb, info = tfds.load("imdb_reviews", with_info=True, as_supervised=False)

with_info=True also returns the information about the dataset; as_supervised=False returns each example as a dictionary.

Downloading and preparing dataset imdb_reviews/plain_text/1.0.0 (download: 80.23 MiB, generated: Unknown size, total: 80.23 MiB) to /root/tensorflow_datasets/imdb_reviews/plain_text/1.0.0...

Dataset imdb_reviews downloaded and prepared to /root/tensorflow_datasets/imdb_reviews/plain_text/1.0.0. Subsequent calls will reuse this data.

info

tfds.core.DatasetInfo(

name='imdb_reviews',

version=1.0.0,

description='Large Movie Review Dataset.

This is a dataset for binary sentiment classification containing substantially more data than previous benchmark datasets. We provide a set of 25,000 highly polar movie reviews for training, and 25,000 for testing. There is additional unlabeled data for use as well.',

homepage='http://ai.stanford.edu/~amaas/data/sentiment/',

features=FeaturesDict({

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=2),

'text': Text(shape=(), dtype=tf.string),

}),

total_num_examples=100000,

splits={

'test': 25000,

'train': 25000,

'unsupervised': 50000,

},

supervised_keys=('text', 'label'),

citation="""@InProceedings{maas-EtAl:2011:ACL-HLT2011,

author = {Maas, Andrew L. and Daly, Raymond E. and Pham, Peter T. and Huang, Dan and Ng, Andrew Y. and Potts, Christopher},

title = {Learning Word Vectors for Sentiment Analysis},

booktitle = {Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies},

month = {June},

year = {2011},

address = {Portland, Oregon, USA},

publisher = {Association for Computational Linguistics},

pages = {142--150},

url = {http://www.aclweb.org/anthology/P11-1015}

}""",

redistribution_info=,

)

Reading with as_supervised=True

imdb, info = tfds.load("imdb_reviews", with_info=True, as_supervised=True)

import numpy as np

train_data, test_data = imdb['train'], imdb['test']

training_sentences = []

training_labels = []

testing_sentences = []

testing_labels = []

for s, l in train_data:
    training_sentences.append(str(s.numpy()))
    training_labels.append(l.numpy())

for s, l in test_data:
    testing_sentences.append(str(s.numpy()))
    testing_labels.append(l.numpy())

training_labels_final = np.array(training_labels)

testing_labels_final = np.array(testing_labels)
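One detail of the loops above is worth illustrating: `s.numpy()` returns a Python `bytes` object, and calling `str()` on bytes keeps the `b'...'` wrapper in the resulting string. The short sketch below uses a made-up byte string (an assumption, not a real review) to compare `str()` with `decode("utf-8")`. The notebook keeps `str()`, so the outputs shown here correspond to that choice.

```python
# Hypothetical review text, standing in for the value returned by s.numpy()
raw = b"This movie was great.<br />Loved it."

as_str = str(raw)              # keeps the bytes literal wrapper: b'...'
decoded = raw.decode("utf-8")  # plain text, without the wrapper

print(as_str[:6])
print(decoded[:4])
```

Switching to `decode` would change the tokens at the start and end of each review, and therefore the lexicon.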

Preparing the data

- vocab_size sets the size of the vocabulary

- embedding_dim is discussed later in the notebook

- max_length is the maximum length, in tokens, allowed for a text

- trunc_type indicates whether overly long sequences are truncated at the beginning ('pre') or at the end ('post')

- oov_tok is the string used for out-of-vocabulary words
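The effect of `max_length` and `trunc_type` can be sketched in pure Python. The function `pad_or_truncate` below is a hypothetical stand-in that mimics, for a single sequence, the documented behaviour of Keras `pad_sequences` (padding and truncating both default to 'pre', with 0 as the fill value). It is an illustration, not the library implementation.

```python
def pad_or_truncate(sequence, maxlen, padding="pre", truncating="pre", value=0):
    """Bring one token sequence to exactly `maxlen` items."""
    if len(sequence) > maxlen:
        if truncating == "pre":
            # drop tokens from the beginning, keep the last maxlen
            sequence = sequence[len(sequence) - maxlen:]
        else:
            # 'post': drop tokens from the end, keep the first maxlen
            sequence = sequence[:maxlen]
    missing = [value] * (maxlen - len(sequence))
    return missing + sequence if padding == "pre" else sequence + missing

short_review = [59, 12, 14]
long_review = [1, 6175, 2, 1, 4916, 4029, 9]

padded_short = pad_or_truncate(short_review, maxlen=5, truncating="post")
kept_start = pad_or_truncate(long_review, maxlen=5, truncating="post")
kept_end = pad_or_truncate(long_review, maxlen=5, truncating="pre")

print(padded_short)  # [0, 0, 59, 12, 14]
print(kept_start)    # [1, 6175, 2, 1, 4916]
print(kept_end)      # [2, 1, 4916, 4029, 9]
```

With `trunc_type='post'` and the default 'pre' padding, short reviews start with zeros while long reviews keep their first `max_length` tokens.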

vocab_size = 10000

embedding_dim = 16

max_length = 120

trunc_type='post'

oov_tok = "<OOV>"

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

tokenizer = Tokenizer(num_words = vocab_size, oov_token=oov_tok)

tokenizer.fit_on_texts(training_sentences)

word_index = tokenizer.word_index

len(word_index)

86539

Let's look at the data with Pandas.

import pandas as pd

word_index_df=pd.DataFrame(word_index.items())

word_index_df.head(10)

0 1

0 <OOV> 1

1 the 2

2 and 3

3 a 4

4 of 5

5 to 6

6 is 7

7 br 8

8 in 9

9 it 10
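Note that `word_index` contains every word seen during fitting (86539 entries), although `num_words` is set to 10000. In the Keras `Tokenizer`, the limit is applied later, when texts are converted to sequences: any word whose index is `num_words` or higher is replaced by the OOV index. The sketch below reproduces that rule in pure Python; `to_sequence` is a hypothetical helper, and the tiny `num_words=6` is chosen only to make the effect visible.

```python
def to_sequence(words, word_index, num_words, oov_index=1):
    """Replace each word by its index; unknown words and words ranked at
    num_words or beyond are mapped to the OOV index."""
    sequence = []
    for word in words:
        index = word_index.get(word)
        if index is None or index >= num_words:
            sequence.append(oov_index)
        else:
            sequence.append(index)
    return sequence

# First entries of the word_index shown above
toy_word_index = {"<OOV>": 1, "the": 2, "and": 3, "a": 4, "of": 5, "to": 6}

limited = to_sequence(["the", "of", "to", "zebra"], toy_word_index, num_words=6)
print(limited)  # [2, 5, 1, 1]
```

This is why every token in the sequences stays below `vocab_size`, which is what the embedding layer expects.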

sequences = tokenizer.texts_to_sequences(training_sentences)

sequences_df=pd.DataFrame(sequences)

sequences_df.head(5)

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 ... 2487 2488 2489 2490 2491 2492 2493 2494 2495 2496 2497 2498 2499 2500 2501 2502 2503 2504 2505 2506 2507 2508 2509 2510 2511 2512 2513 2514 2515 2516 2517 2518 2519 2520 2521 2522 2523 2524 2525 2526

0 59 12 14 35 439 400 18 174 29 1 9 33.0 1378.0 3401.0 42.0 496.0 1.0 197.0 25.0 88.0 156.0 19.0 12.0 211.0 340.0 29.0 70.0 248.0 213.0 9.0 486.0 62.0 70.0 88.0 116.0 99.0 24.0 5740.0 12.0 3317.0 ... NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN

1 256 28 78 585 6 815 2383 317 109 19 12 7.0 643.0 696.0 6.0 4.0 2249.0 5.0 183.0 599.0 68.0 1483.0 114.0 2289.0 3.0 4005.0 22.0 2.0 1.0 3.0 263.0 43.0 4754.0 4.0 173.0 190.0 22.0 12.0 4126.0 11.0 ... NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN

2 1 6175 2 1 4916 4029 9 4 912 1622 3 1969.0 1307.0 3.0 2384.0 8836.0 201.0 746.0 361.0 15.0 34.0 208.0 308.0 6.0 83.0 8.0 8.0 19.0 214.0 22.0 352.0 4.0 1.0 990.0 2.0 82.0 5.0 3608.0 545.0 1.0 ... NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN

3 360 7 2 239 5 20 16 4 8837 2705 2679 55.0 2.0 367.0 5.0 2.0 179.0 58.0 141.0 1419.0 17.0 94.0 203.0 980.0 15.0 23.0 1.0 86.0 4.0 193.0 3134.0 3069.0 3.0 1.0 16.0 4.0 383.0 5.0 640.0 395.0 ... NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN

4 3049 414 28 1058 31 2 370 13 141 2541 9 12.0 20.0 25.0 677.0 439.0 1517.0 2.0 115.0 54.0 1.0 287.0 2.0 1.0 5.0 2.0 674.0 1.0 55.0 347.0 25.0 187.0 34.0 182.0 6.0 29.0 7038.0 19.0 55.0 61.0 ... NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN

5 rows × 2527 columns

padded = pad_sequences(sequences,maxlen=max_length, truncating=trunc_type)

sequences_df=pd.DataFrame(padded)

sequences_df.head(5)

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 ... 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119

0 0 0 59 12 14 35 439 400 18 174 29 1 9 33 1378 3401 42 496 1 197 25 88 156 19 12 211 340 29 70 248 213 9 486 62 70 88 116 99 24 5740 ... 3401 14 163 19 4 1253 927 7986 9 4 18 13 14 4200 5 102 148 1237 11 240 692 13 44 25 101 39 12 7232 1 39 1378 1 52 409 11 99 1214 874 145 10

1 0 0 0 0 0 0 0 256 28 78 585 6 815 2383 317 109 19 12 7 643 696 6 4 2249 5 183 599 68 1483 114 2289 3 4005 22 2 1 3 263 43 4754 ... 11 200 28 1059 171 5 2 20 19 11 298 2 2182 5 10 3 285 43 477 6 602 5 94 203 1 206 102 148 4450 16 228 336 11 2510 392 12 20 32 31 47

2 1 6175 2 1 4916 4029 9 4 912 1622 3 1969 1307 3 2384 8836 201 746 361 15 34 208 308 6 83 8 8 19 214 22 352 4 1 990 2 82 5 3608 545 1 ... 2 3652 317 2 1 1835 3445 451 4030 3 1168 985 6 28 4091 3608 545 16 1 2 2297 2430 16 2 299 1357 1259 8 8 2297 803 29 2871 16 4 1 3028 564 5 746

3 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 360 7 2 239 5 20 16 4 8837 2705 ... 2 115 376 44 25 61 1 6 1681 61 1846 4127 43 4 2289 3 1963 1 145 159 784 113 32 94 120 4 215 20 9 175 282 3 30 13 1027 2 2846 10 2020 47

4 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3049 414 ... 187 34 421 2 1 5 4 2436 281 154 430 3 2 430 469 4 129 68 713 75 144 31 29 37 2071 32 12 568 27 95 212 57 2 3184 6 6665 26 284 119 47

5 rows × 120 columns

Model

model = tf.keras.Sequential([
    tf.keras.layers.Embedding(vocab_size, embedding_dim, input_length=max_length),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(6, activation='relu'),
    tf.keras.layers.Dense(1, activation='sigmoid')
])

model.compile(loss='binary_crossentropy',optimizer='adam',metrics=['accuracy'])

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding (Embedding) (None, 120, 16) 160000

_________________________________________________________________

flatten (Flatten) (None, 1920) 0

_________________________________________________________________

dense (Dense) (None, 6) 11526

_________________________________________________________________

dense_1 (Dense) (None, 1) 7

=================================================================

Total params: 171,533

Trainable params: 171,533

Non-trainable params: 0

_________________________________________________________________
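The parameter counts in this summary can be checked by hand. The short calculation below recomputes them from the hyperparameters defined earlier; `hidden_units` is simply a name introduced here for the 6 units of the first Dense layer.

```python
vocab_size = 10000
embedding_dim = 16
max_length = 120
hidden_units = 6  # units of the first Dense layer

# Embedding: one vector of size embedding_dim per vocabulary entry
embedding_params = vocab_size * embedding_dim

# Flatten: no parameters, only reshapes (120, 16) into 1920 values
flatten_size = max_length * embedding_dim

# Dense layers: one weight per input per unit, plus one bias per unit
dense_params = flatten_size * hidden_units + hidden_units
output_params = hidden_units * 1 + 1

total_params = embedding_params + dense_params + output_params
print(embedding_params, flatten_size, dense_params, output_params, total_params)
# 160000 1920 11526 7 171533
```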

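# testing_padded is used below but is not defined in this excerpt;
# this assumes the test reviews are prepared with the same settings as the training reviews
testing_sequences = tokenizer.texts_to_sequences(testing_sentences)

testing_padded = pad_sequences(testing_sequences, maxlen=max_length, truncating=trunc_type)

num_epochs = 10

model.fit(padded, training_labels_final, epochs=num_epochs, validation_data=(testing_padded, testing_labels_final))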

Epoch 1/10

782/782 [==============================] - 7s 9ms/step - loss: 0.4882 - accuracy: 0.7537 - val_loss: 0.3452 - val_accuracy: 0.8480

Epoch 2/10

782/782 [==============================] - 7s 8ms/step - loss: 0.2444 - accuracy: 0.9032 - val_loss: 0.3724 - val_accuracy: 0.8376

Epoch 3/10

782/782 [==============================] - 7s 9ms/step - loss: 0.1054 - accuracy: 0.9710 - val_loss: 0.4594 - val_accuracy: 0.8211

Epoch 4/10

782/782 [==============================] - 7s 9ms/step - loss: 0.0276 - accuracy: 0.9963 - val_loss: 0.5343 - val_accuracy: 0.8233

Epoch 5/10

782/782 [==============================] - 7s 9ms/step - loss: 0.0065 - accuracy: 0.9996 - val_loss: 0.6053 - val_accuracy: 0.8230

Epoch 6/10

782/782 [==============================] - 7s 8ms/step - loss: 0.0021 - accuracy: 1.0000 - val_loss: 0.6627 - val_accuracy: 0.8234

Epoch 7/10

782/782 [==============================] - 7s 8ms/step - loss: 9.7176e-04 - accuracy: 1.0000 - val_loss: 0.6994 - val_accuracy: 0.8262

Epoch 8/10

782/782 [==============================] - 7s 9ms/step - loss: 5.1851e-04 - accuracy: 1.0000 - val_loss: 0.7461 - val_accuracy: 0.8256

Epoch 9/10

782/782 [==============================] - 7s 8ms/step - loss: 2.9132e-04 - accuracy: 1.0000 - val_loss: 0.7815 - val_accuracy: 0.8262

Epoch 10/10

782/782 [==============================] - 7s 8ms/step - loss: 1.7517e-04 - accuracy: 1.0000 - val_loss: 0.8207 - val_accuracy: 0.8258

<tensorflow.python.keras.callbacks.History at 0x7f8dbcc1db38>
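A quick reading of this log: the training loss falls towards zero while the validation loss rises from the first epoch onwards, which suggests that the model overfits the training reviews. The sketch below copies the figures from the log above and locates the epoch with the lowest validation loss. It is only a way to read the numbers, not part of the original notebook.

```python
# Values copied from the training log above (epochs 1 to 10)
val_loss = [0.3452, 0.3724, 0.4594, 0.5343, 0.6053,
            0.6627, 0.6994, 0.7461, 0.7815, 0.8207]
train_acc = [0.7537, 0.9032, 0.9710, 0.9963, 0.9996,
             1.0, 1.0, 1.0, 1.0, 1.0]
val_acc = [0.8480, 0.8376, 0.8211, 0.8233, 0.8230,
           0.8234, 0.8262, 0.8256, 0.8262, 0.8258]

# Epoch (1-based) at which the validation loss is lowest
best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1

# Gap between training and validation accuracy after the last epoch
final_gap = round(train_acc[-1] - val_acc[-1], 4)

print(best_epoch, val_acc[best_epoch - 1], final_gap)
```

Here the best validation loss is reached at the very first epoch, so training longer mainly widens the gap between training and validation accuracy.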

e = model.layers[0]

weights = e.get_weights()[0]

print(weights.shape) # shape: (vocab_size, embedding_dim)

(10000, 16)
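The shape (10000, 16) reflects that the embedding layer is a lookup table: row i holds the vector of token i. The toy sketch below uses a made-up table of 5 tokens and 3 dimensions (all values are illustrative assumptions) to show how a padded sequence is turned into vectors and then flattened, which is how (120, 16) becomes 1920 in the model above.

```python
toy_vocab_size, toy_embedding_dim = 5, 3

# Hypothetical weights: row i is the vector associated with token i
toy_weights = [[float(10 * i + j) for j in range(toy_embedding_dim)]
               for i in range(toy_vocab_size)]

def embed(token_ids, weights):
    """Look up one row of the table per token."""
    return [weights[token] for token in token_ids]

def flatten(vectors):
    """Concatenate the vectors into a single flat list."""
    return [x for vector in vectors for x in vector]

padded_review = [0, 0, 2, 4]                 # already padded to length 4
embedded = embed(padded_review, toy_weights)  # 4 vectors of 3 values
flat = flatten(embedded)                      # 4 * 3 = 12 values

print(embedded[2])  # [20.0, 21.0, 22.0]
print(len(flat))    # 12
```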