Whether the task is binary or multi-class classification, the problem does not change fundamentally.

The example used here is the classification of BBC news texts into 5 categories: 'tech', 'business', 'sport', 'entertainment' and 'politics'.

import csv

import tensorflow as tf

import numpy as np

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

!wget --no-check-certificate \
    https://storage.googleapis.com/laurencemoroney-blog.appspot.com/bbc-text.csv \
    -O /tmp/bbc-text.csv

--2020-05-15 13:32:57-- https://storage.googleapis.com/laurencemoroney-blog.appspot.com/bbc-text.csv

Resolving storage.googleapis.com (storage.googleapis.com)... 74.125.195.128, 2607:f8b0:400e:c05::80

Connecting to storage.googleapis.com (storage.googleapis.com)|74.125.195.128|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 5057493 (4.8M) [application/octet-stream]

Saving to: ‘/tmp/bbc-text.csv’

/tmp/bbc-text.csv 100%[===================>] 4.82M --.-KB/s in 0.03s

2020-05-15 13:32:57 (161 MB/s) - ‘/tmp/bbc-text.csv’ saved [5057493/5057493]

vocab_size = 10000

embedding_dim = 16

max_length = 250

trunc_type = 'post'

padding_type = 'post'

oov_tok = '<OOV>'

training_portion = 0.8

What is new in this example is the use of stopwords: very common words that are removed from each text before tokenization.

sentences = []

labels = []

stopwords = [ "a", "about", "above", "after", "again", "against", "all", "am", "an", "and", "any", "are", "as", "at", "be", "because", "been", "before", "being", "below", "between", "both", "but", "by", "could", "did", "do", "does", "doing", "down", "during", "each", "few", "for", "from", "further", "had", "has", "have", "having", "he", "he'd", "he'll", "he's", "her", "here", "here's", "hers", "herself", "him", "himself", "his", "how", "how's", "i", "i'd", "i'll", "i'm", "i've", "if", "in", "into", "is", "it", "it's", "its", "itself", "let's", "me", "more", "most", "my", "myself", "nor", "of", "on", "once", "only", "or", "other", "ought", "our", "ours", "ourselves", "out", "over", "own", "same", "she", "she'd", "she'll", "she's", "should", "so", "some", "such", "than", "that", "that's", "the", "their", "theirs", "them", "themselves", "then", "there", "there's", "these", "they", "they'd", "they'll", "they're", "they've", "this", "those", "through", "to", "too", "under", "until", "up", "very", "was", "we", "we'd", "we'll", "we're", "we've", "were", "what", "what's", "when", "when's", "where", "where's", "which", "while", "who", "who's", "whom", "why", "why's", "with", "would", "you", "you'd", "you'll", "you're", "you've", "your", "yours", "yourself", "yourselves" ]

print(len(stopwords))

with open("/tmp/bbc-text.csv", 'r') as csvfile:
    reader = csv.reader(csvfile, delimiter=',')
    next(reader)  # skip the header row
    for row in reader:
        # column 0 holds the category, column 1 the article text
        labels.append(row[0])
        sentence = row[1]
        # remove each stopword that is surrounded by spaces
        for word in stopwords:
            token = " " + word + " "
            sentence = sentence.replace(token, " ")
        sentences.append(sentence)

print(len(labels))

print(len(sentences))

print(sentences[0])

2225

2225

tv future hands viewers home theatre systems plasma high-definition tvs digital video recorders moving living room ...
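The loop above removes a stopword only when it has a space on both sides, so a stopword at the very start or end of a text survives. As a minimal sketch of an alternative (not the approach used in this notebook), the text can be split into tokens and filtered against a set. The function names, the sample sentence and the shortened stopword list below are illustrative assumptions, not part of the original example. Note that `split()` also collapses repeated spaces, so the cleaned text would differ slightly from the output shown above.

```python
def remove_stopwords(text, stopwords):
    """Return `text` without any whitespace-separated token found in `stopwords`."""
    stopword_set = set(stopwords)  # set membership is O(1) per token
    return " ".join(word for word in text.split() if word not in stopword_set)


def remove_stopwords_by_replace(text, stopwords):
    """Same strategy as the loop above: replace ' word ' with a single space."""
    for word in stopwords:
        text = text.replace(" " + word + " ", " ")
    return text


# Illustrative sentence and a shortened stopword list (not taken from the dataset)
sample = "the future is in the hands of viewers"
short_stopwords = ["the", "is", "in", "of"]

print(remove_stopwords_by_replace(sample, short_stopwords))  # the future hands viewers
print(remove_stopwords(sample, short_stopwords))             # future hands viewers
```

The replace-based version keeps the leading "the" because nothing precedes it, while the token filter drops it.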

import pandas as pd

dfSentences=pd.DataFrame(sentences)

dfSentences.head()

|   | 0 |
|---|---|
| 0 | tv future hands viewers home theatre systems ... |
| 1 | worldcom boss left books alone former worldc... |
| 2 | tigers wary farrell gamble leicester say wil... |
| 3 | yeading face newcastle fa cup premiership side... |
| 4 | ocean s twelve raids box office ocean s twelve... |

dfSentences.describe()

|   | 0 |
|---|---|
| count | 2225 |
| unique | 2123 |
| top | kennedy questions trust blair lib dem leader c... |
| freq | 2 |

dfLabels=pd.DataFrame(labels)

dfLabels[0].unique()

array(['tech', 'business', 'sport', 'entertainment', 'politics'], dtype=object)

dfLabels.head(10)

|   | 0 |
|---|---|
| 0 | tech |
| 1 | business |
| 2 | sport |
| 3 | sport |
| 4 | entertainment |
| 5 | politics |
| 6 | politics |
| 7 | sport |
| 8 | sport |
| 9 | entertainment |

train_size = int(training_portion * len(labels))

train_sentences = sentences[:train_size]

train_labels = labels[:train_size]

validation_sentences = sentences[train_size:]

validation_labels = labels[train_size:]

print(train_size)

print(len(train_sentences))

print(len(train_labels))

print(len(validation_sentences))

print(len(validation_labels))

1780

1780

1780

445

445

tokenizer = Tokenizer(num_words = vocab_size, oov_token = oov_tok)

tokenizer.fit_on_texts(train_sentences)

word_index = tokenizer.word_index

train_sequences = tokenizer.texts_to_sequences(train_sentences)

train_padded = pad_sequences(train_sequences, padding=padding_type, maxlen=max_length)

print(len(train_sequences[0]))

print(len(train_padded[0]))

print(len(train_sequences[1]))

print(len(train_padded[1]))

print(len(train_sequences[10]))

print(len(train_padded[10]))

449

250

200

250

192

250

validation_sequences = tokenizer.texts_to_sequences(validation_sentences)

validation_padded = pad_sequences(validation_sequences, padding = padding_type, maxlen=max_length)

print(len(validation_labels))

print(validation_padded.shape)

445

(445, 250)

label_tokenizer = Tokenizer()

label_tokenizer.fit_on_texts(labels)

training_label_seq = np.array(label_tokenizer.texts_to_sequences(train_labels))

validation_label_seq = np.array(label_tokenizer.texts_to_sequences(validation_labels))

label_tokenizer.index_word

{1: 'sport', 2: 'business', 3: 'politics', 4: 'tech', 5: 'entertainment'}

print(training_label_seq[0])

print(training_label_seq[1])

print(training_label_seq[2])

print(training_label_seq.shape)

print(validation_label_seq[0])

print(validation_label_seq[1])

print(validation_label_seq[2])

print(validation_label_seq.shape)

[4]

[2]

[1]

(1780, 1)

[5]

[4]

[3]

(445, 1)
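The Keras `Tokenizer` numbers its entries from 1, so the five labels are encoded as 1 to 5 and index 0 is never used. Since `sparse_categorical_crossentropy` expects integer labels smaller than the number of output units, this is presumably why the final `Dense` layer below has 6 units rather than 5. A minimal sketch of an alternative, assuming one preferred exactly 5 output units, is to shift the labels to start at 0. The array below is a hypothetical stand-in with the same shape convention as `training_label_seq`, not the real data.

```python
import numpy as np

# Hypothetical stand-in for training_label_seq: shape (n, 1), values in 1..5
label_seq = np.array([[4], [2], [1], [5], [3]])

# Shift to 0-based class ids so that 5 output units would be enough
zero_based = label_seq - 1
num_classes = int(zero_based.max()) + 1

print(zero_based.ravel().tolist())  # [3, 1, 0, 4, 2]
print(num_classes)                  # 5
```

With this shift, predictions would need 1 added back before looking them up in `label_tokenizer.index_word`.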

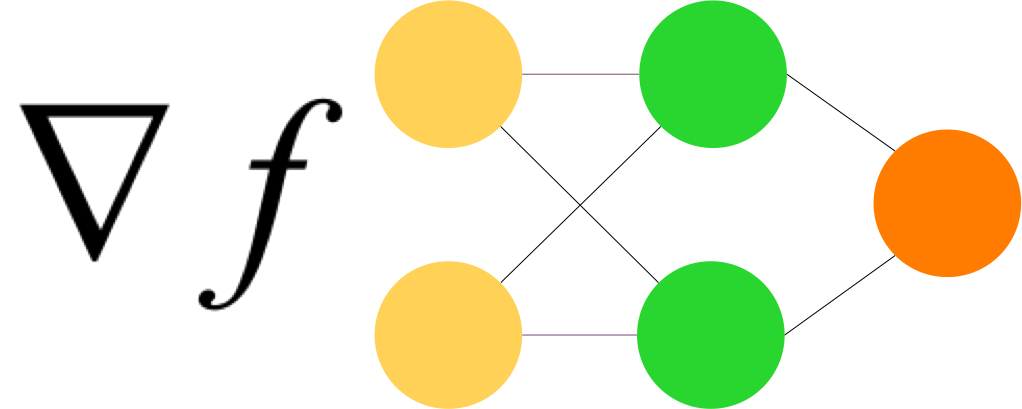

model = tf.keras.Sequential([
    tf.keras.layers.Embedding(vocab_size, embedding_dim, input_length=max_length),
    tf.keras.layers.GlobalAveragePooling1D(),
    tf.keras.layers.Dense(24, activation='relu'),
    tf.keras.layers.Dense(6, activation='softmax')
])

model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

model.summary()

Model: "sequential_5"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| embedding_5 (Embedding) | (None, 250, 16) | 160000 |
| global_average_pooling1d_5 ( | (None, 16) | 0 |
| dense_10 (Dense) | (None, 24) | 408 |
| dense_11 (Dense) | (None, 6) | 150 |

Total params: 160,558

Trainable params: 160,558

Non-trainable params: 0

num_epochs = 20

history = model.fit(train_padded, training_label_seq, epochs = num_epochs, validation_data=(validation_padded, validation_label_seq), verbose=2)

There is some overfitting: in the log below, training accuracy reaches 1.0000 while validation accuracy plateaus around 0.955. We will study this problem later.

Epoch 1/20

56/56 - 0s - loss: 1.7662 - accuracy: 0.2551 - val_loss: 1.7294 - val_accuracy: 0.2966

Epoch 2/20

56/56 - 0s - loss: 1.6599 - accuracy: 0.4000 - val_loss: 1.5865 - val_accuracy: 0.4157

Epoch 3/20

56/56 - 0s - loss: 1.4570 - accuracy: 0.5652 - val_loss: 1.3540 - val_accuracy: 0.6315

Epoch 4/20

56/56 - 0s - loss: 1.1777 - accuracy: 0.7034 - val_loss: 1.0757 - val_accuracy: 0.7281

Epoch 5/20

56/56 - 0s - loss: 0.8998 - accuracy: 0.8197 - val_loss: 0.8481 - val_accuracy: 0.8315

Epoch 6/20

56/56 - 0s - loss: 0.6894 - accuracy: 0.8871 - val_loss: 0.6863 - val_accuracy: 0.8652

Epoch 7/20

56/56 - 0s - loss: 0.5346 - accuracy: 0.9354 - val_loss: 0.5708 - val_accuracy: 0.8697

Epoch 8/20

56/56 - 0s - loss: 0.4141 - accuracy: 0.9478 - val_loss: 0.4696 - val_accuracy: 0.9056

Epoch 9/20

56/56 - 0s - loss: 0.3157 - accuracy: 0.9697 - val_loss: 0.3930 - val_accuracy: 0.9258

Epoch 10/20

56/56 - 0s - loss: 0.2396 - accuracy: 0.9820 - val_loss: 0.3326 - val_accuracy: 0.9393

Epoch 11/20

56/56 - 0s - loss: 0.1816 - accuracy: 0.9888 - val_loss: 0.2854 - val_accuracy: 0.9461

Epoch 12/20

56/56 - 0s - loss: 0.1398 - accuracy: 0.9927 - val_loss: 0.2518 - val_accuracy: 0.9528

Epoch 13/20

56/56 - 0s - loss: 0.1093 - accuracy: 0.9949 - val_loss: 0.2289 - val_accuracy: 0.9528

Epoch 14/20

56/56 - 0s - loss: 0.0870 - accuracy: 0.9961 - val_loss: 0.2101 - val_accuracy: 0.9528

Epoch 15/20

56/56 - 0s - loss: 0.0702 - accuracy: 0.9966 - val_loss: 0.1969 - val_accuracy: 0.9551

Epoch 16/20

56/56 - 0s - loss: 0.0574 - accuracy: 0.9978 - val_loss: 0.1851 - val_accuracy: 0.9551

Epoch 17/20

56/56 - 0s - loss: 0.0473 - accuracy: 0.9994 - val_loss: 0.1778 - val_accuracy: 0.9551

Epoch 18/20

56/56 - 0s - loss: 0.0397 - accuracy: 0.9994 - val_loss: 0.1706 - val_accuracy: 0.9551

Epoch 19/20

56/56 - 0s - loss: 0.0335 - accuracy: 1.0000 - val_loss: 0.1630 - val_accuracy: 0.9528

Epoch 20/20

56/56 - 0s - loss: 0.0286 - accuracy: 1.0000 - val_loss: 0.1595 - val_accuracy: 0.9551
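One rough way to see the overfitting mentioned above is to compare training and validation accuracy epoch by epoch. The sketch below does this for four epochs whose values are copied from the log above. The helper name `accuracy_gaps` is a hypothetical choice. In a live session the same numbers would presumably be read from `history.history` rather than typed in.

```python
def accuracy_gaps(log):
    """Return (epoch, train_accuracy - val_accuracy) for each logged epoch."""
    return [(epoch, acc - val_acc) for epoch, acc, val_acc in log]


# (epoch, accuracy, val_accuracy) copied from epochs 5, 10, 15 and 20 of the log
training_log = [
    (5, 0.8197, 0.8315),
    (10, 0.9820, 0.9393),
    (15, 0.9966, 0.9551),
    (20, 1.0000, 0.9551),
]

for epoch, gap in accuracy_gaps(training_log):
    print(f"epoch {epoch:2d}: train - val accuracy = {gap:+.4f}")
```

The gap is slightly negative at epoch 5 and settles at roughly 0.04 to 0.045 from epoch 10 onward, which matches a model that keeps fitting the training set while validation accuracy stops improving.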